How to conduct a basic SEO audit

If performing a search engine optimisation (SEO) audit of your website sounds too complicated, too technical, or like something you believe only a few experts could tackle, think again.

Knowing how to assess your website is a great skill to possess. And with so much information available on the topic, it has become easy and practical for anyone to perform an SEO audit for a website.

So if you’re a one- or two-person team and want to learn how to conduct a basic audit for your small business website, this guide covers everything you need to ensure your site is always in top shape.

Plus, it’ll also save you about £400 to £1,300 because that’s how much a basic SEO audit can cost you. So put that money back into your pocket and let’s get started.

But before we get into how to perform an SEO audit, let’s first explain what it is and what you can expect from it.

What is an SEO audit?

An SEO audit helps you evaluate the health of various areas of your website. It works like a checklist of questions such as:

- Are titles unique for each page and within the correct length?

- Is my content unique?

- Is my content properly formatted using headings?

- Are my web pages loading fast enough?

This guide will walk you through all the steps you need to follow to determine how well your site is performing and what you can do to make it even better.

What to expect from an SEO audit?

You should expect three things:

- A description of the current state of your website. You get to see how your site is performing in search, social media and more.

- A list of actions and recommendations that you can use to improve your site.

- Potential sources of traffic and marketing opportunities that you can take advantage of to promote your brand.

How often does your site need an SEO audit?

This isn’t a one-time thing. You’ll need to perform regular SEO audits once or twice a year to ensure everything works smoothly and that your site is up-to-date with the latest developments. With things changing so often in the SEO industry, it’s important to understand that what works today may not work six months from now.

In addition:

- Algorithms are constantly updated and so are webmaster guidelines

- Content gets outdated quickly

- You may get website errors, including broken links, which you may not be aware of and this can cause you to lose traffic

- Regular audits alert you to negative SEO, such as poor-quality sites linking to yours or suspicious linking patterns on your site that can harm your SEO

- You’ll be able to quickly identify the reasons for a possible ranking or traffic drop in search engine results

While this task may seem daunting, I promise it gets easier each year.

Ok, now that you know what an SEO audit is and why it’s important, let’s move on to the fun part.

How to perform your own SEO Audit

To make this guide easier to go through, I’ve structured it into six sections, as follows:

- Accessibility

- Indexability

- On-page analysis

- Backlinks

- Social media analysis

- Competitive analysis

1. Accessibility

One of the most important things that you need to check is whether your content is accessible to search engines. You want to make sure that search engines are able to crawl your site. If something is stopping them from accessing your site, it’ll be like it doesn’t even exist.

Let’s see how you can do that.

Robots.txt

This file tells search engines which pages they’re not allowed to crawl. So, check this file to make sure you haven’t blocked any important pages from being crawled.

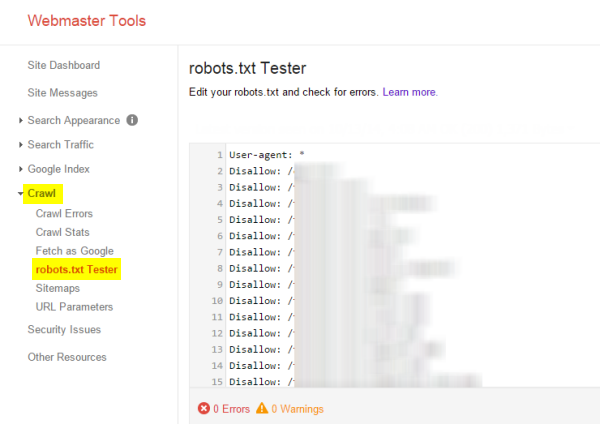

To manually check the robots.txt file and ensure it’s not restricting access to important sections of your site, go to your Google Webmaster Tools (GWT) account -> Crawl -> robots.txt Tester.

This is where you’ll see the URLs that are being blocked by the file:

There are situations when you want to add specific pages to the robots.txt file to prevent them from being crawled. Here’s a great guide that explains how to create your own robots.txt file.
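If you’d rather check the file yourself, here’s a minimal sketch using Python’s standard `urllib.robotparser` module. The robots.txt content, the list of important URLs and the `blocked_urls` helper are all made up for illustration, so swap in your own file and pages.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; paste in your own file instead.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /blog/
"""

# Hypothetical pages that you want search engines to reach.
IMPORTANT_URLS = [
    "https://www.example.com/",
    "https://www.example.com/blog/seo-audit",
    "https://www.example.com/contact",
]


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that this robots.txt blocks for the given crawler."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


print(blocked_urls(ROBOTS_TXT, IMPORTANT_URLS))
```

In this example the `/blog/` rule blocks the blog post, which is exactly the kind of accidental restriction the audit is meant to catch.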

HTTP Status Codes

Broken links are bad for user experience and can also affect your SEO.

So if search engines and users are trying to access pages on your site but all they see are 404, 408, 500, 502 errors, you need to fix those issues right away.

The codes 2xx, 3xx, 4xx and 5xx are known as HTTP status codes, and only the 4xx and 5xx ranges indicate errors. If, for example, your web server returns an HTTP status code of 200, it means that everything is OK. A 3xx code means the request was redirected. However, if it returns a 4xx or 5xx status code, something went wrong that is preventing search engines as well as users from accessing your page content.
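To make the ranges concrete, here’s a small sketch that sorts status codes into audit verdicts. The `classify_status` function and the sample crawl results are hypothetical, and the labels are just one way to group the codes.

```python
def classify_status(code):
    """Map an HTTP status code to a short audit verdict."""
    if 200 <= code < 300:
        return "ok"
    if code in (301, 308):
        return "permanent redirect"
    if 300 <= code < 400:
        return "temporary or other redirect"
    if 400 <= code < 500:
        return "client error"
    if 500 <= code < 600:
        return "server error"
    return "unknown"


# Hypothetical crawl results: path -> status code returned by the server.
CRAWL = {"/": 200, "/old-page": 404, "/moved": 301, "/checkout": 502}

# Pages that need attention are the ones in the 4xx and 5xx ranges.
needs_fixing = sorted(
    path for path, code in CRAWL.items()
    if classify_status(code).endswith("error")
)
print(needs_fixing)
```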

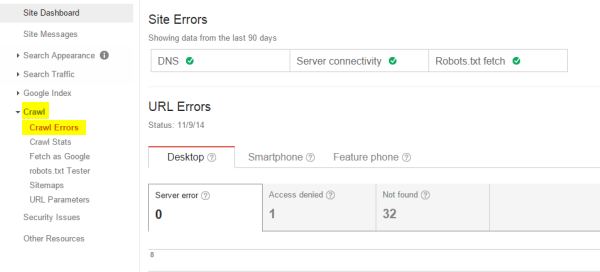

The easiest way to check if you have pages that return errors is to log in to your Google Webmaster Tools account and go to Health -> Crawl Errors report.

Here you can click on the three tabs to see a list of the pages/URLs on your site where Google has found each type of error. Errors are also categorised by device type, which makes it easier to isolate desktop-only or mobile-only errors. Make sure you check GWT regularly for these errors and, if you find any, try to resolve them as soon as possible.

A common scenario is when an old page is deleted and a new one is created to take its place: anyone who tries to access the old URL gets a 404 not found error. So make sure that when you move a page you set up a 301 redirect. This will send visitors to the new location and will also tell search engines that the page has permanently moved. You can learn more about 301 redirects in point four of this article.

Consolidating www and non-www

Speaking of redirection, don’t forget to consolidate the www and non-www versions of your domain. If you don’t do this you’re basically telling search engines to keep two copies of your site in their index which will also split the link authority between them. You don’t want this to happen.

So just pick the URL version you prefer and always use that format, whether it’s on your site, on Twitter or in a guest blog on another website. Then create a 301 redirect or add canonical tags to tell Google which version of the site it should pay attention to.
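As a rough illustration of what “always use that format” means, the sketch below rewrites both host variants to one preferred version. `PREFERRED_HOST` and `canonical_url` are hypothetical names, and this only normalises URLs in your own content; the actual 301 redirect still has to be configured on your web server.

```python
from urllib.parse import urlsplit, urlunsplit

PREFERRED_HOST = "www.example.com"  # hypothetical: the version you picked


def canonical_url(url, preferred_host=PREFERRED_HOST):
    """Rewrite the www and non-www variants of a URL to the preferred host."""
    parts = urlsplit(url)
    bare = preferred_host[4:] if preferred_host.startswith("www.") else preferred_host
    host = parts.netloc.lower()
    if host in (bare, "www." + bare):
        host = preferred_host
    # Fragments (#...) are dropped because search engines ignore them.
    return urlunsplit((parts.scheme, host, parts.path or "/", parts.query, ""))


print(canonical_url("http://example.com/blog"))
print(canonical_url("https://www.example.com"))
```

URLs on other domains pass through unchanged apart from losing their fragment.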

XML Sitemap

Sitemaps are like a roadmap for search engines. They include every page on your site, making sure search engine bots don’t miss any important web pages or pieces of content on your site.

You can use tools like the XML Sitemap Creator to automatically create a sitemap. Once your XML sitemap is created, you should submit it to Google Webmaster Tools and Bing so that search engines can easily crawl and index your website.

Make sure that your sitemap follows the Sitemap protocol, is readable and is kept up-to-date.
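If you’re curious what such a file looks like, here’s a minimal sketch that builds one with Python’s standard library. The `build_sitemap` helper and the page list are hypothetical; the namespace is the one defined by the Sitemap protocol.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from a list of (url, last_modified_date) tuples."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod  # YYYY-MM-DD format
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages; list every page you want search engines to find.
PAGES = [
    ("https://www.example.com/", "2015-01-15"),
    ("https://www.example.com/blog/seo-audit", "2015-02-01"),
]
sitemap_xml = build_sitemap(PAGES)
print(sitemap_xml)
```

Save the output as sitemap.xml in your site’s root folder before submitting it.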


Site Performance

It doesn’t matter how awesome your site’s design and content are, and it doesn’t matter if your site is at the top of the search results for a very competitive keyword if it’s taking too long to load.

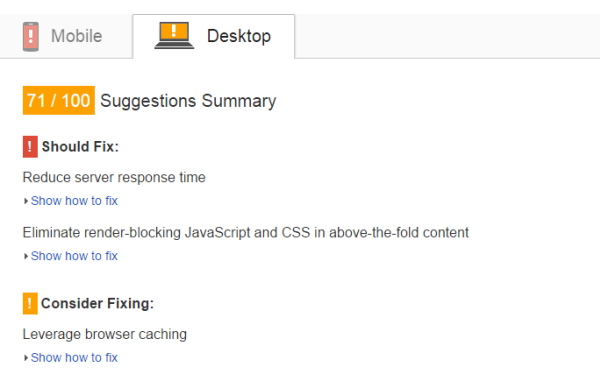

Why? Users have little patience. If your site is slow, meaning a page takes more than ten seconds to load, they’ll immediately hit the back button. That creates bad engagement metrics and, as a result, hurts your site’s performance. Search engines also factor page speed into rankings, and a slow site is harder for them to crawl, so your rankings will suffer too.

By making some changes to help speed up your site you can increase rankings and user engagement as well as revenue. Take Amazon, for example, which increased revenue by 1% for every 100ms of improvement. Walmart saw the same.

Here are a few free tools you can use to check how fast your pages are loading:

These tools will analyse your site’s load times and also provide suggestions on making improvements.

Check out some of the suggestions you might receive for a website when you use Google’s PageSpeed Insights tool:

2. Indexability

The first thing you should check is if your website is indexed at all.

An easy way to find this out is to go to Google and run a few searches for:

- your brand name

- your website name and its extension (eg 123-reg.co.uk)

- site:yourdomain.com (also known as the “site:” command)

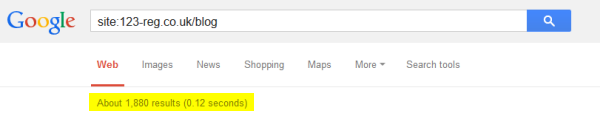

For example, if we run a search on Google for “site:123-reg.co.uk/blog”, we see that Google has indexed approximately 1,880 pages for the 123-reg blog.

This is just a rough estimate. You can also check the number of indexed pages by going to your GWT account -> Google Index -> Index Status.

So, what does this tell us?

There are usually three scenarios:

- The index and actual count are similar (By ‘actual count’ I mean the pages you submitted in your XML sitemap which were indexed by Google). This means that search engines are successfully crawling and indexing your site’s pages.

- The index count is smaller than the actual count. This means that search engines aren’t indexing many of your pages. If it’s a problem of accessibility, go back to the first section and investigate the problem. If not, you might need to check if your site has been penalised by Google. Check out our in-depth guide to find out how to tell if you’ve been penalised and what you can do about it.

- The index count is larger than the actual count. This may mean that you’re either dealing with duplicate content (we’ll explain how to fix duplicate content in the next section) or pages that are accessible through multiple entry points (for example: yoursite.co.uk/blog and www.yoursite.co.uk/blog). For steps on how to fix this, go back to “Consolidating www and non-www” in the previous section.
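The three scenarios above boil down to a simple comparison, sketched below. The `index_health` function and its 10% tolerance are assumptions for illustration; pick whatever margin makes sense for the size of your site.

```python
def index_health(indexed, submitted, tolerance=0.10):
    """Compare Google's index count with the pages in your XML sitemap.

    `tolerance` is an assumed margin (10%) within which the two counts
    are treated as similar.
    """
    if submitted <= 0:
        raise ValueError("submitted must be a positive page count")
    ratio = indexed / submitted
    if abs(ratio - 1) <= tolerance:
        return "similar: crawling and indexing look healthy"
    if ratio < 1:
        return "under-indexed: check accessibility or a possible penalty"
    return "over-indexed: check duplicate content or www/non-www variants"


# Hypothetical counts: 1,880 pages indexed against 1,900 in the sitemap.
print(index_health(1880, 1900))
```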

3. On-page analysis

One of the easiest ways to improve your website’s SEO is through on-site changes. While it can take time to build high-quality backlinks, on-site tweaks require just a few hours of effort.

URLs

Take a closer look at all your site’s URLs and ask yourself these questions:

- Is the URL short and user-friendly?

- Does it include relevant keywords that describe the content on that page?

- Does it use hyphens to separate words?

For more tips and advice, check out these 11 best practices for URLs from the Moz Blog.

So, the idea is to have URLs that are short and easy to type, while still reflecting the organisational structure of the site.

For example:

www.example.com

www.example.com/page-name

www.example.com/category/page-name
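If you have a long list of URLs to review, a short script can flag the obvious problems for you. This is only a sketch: `url_issues` is a made-up helper and the 75-character limit is an assumed threshold, not an official rule. Whether a URL contains relevant keywords still needs a human eye.

```python
from urllib.parse import urlsplit

MAX_URL_LENGTH = 75  # assumed threshold for a "short" URL


def url_issues(url):
    """Return a list of basic problems found in a single URL."""
    # Add "//" so that URLs written without a scheme still parse correctly.
    path = urlsplit(url if "//" in url else "//" + url).path
    issues = []
    if len(url) > MAX_URL_LENGTH:
        issues.append("too long")
    if "_" in path or " " in path or "%20" in path:
        issues.append("use hyphens to separate words")
    if path != path.lower():
        issues.append("use lowercase letters")
    return issues


print(url_issues("www.example.com/category/page-name"))
print(url_issues("www.example.com/Category/page_name"))
```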

Internal linking

Linking your pages together is useful to both search engines and users. When you’re doing your audit, make sure you link related pages together. By doing this you’re ensuring that search engines crawl through and reach every single page on your site. Plus, you’re making it easier for your visitors to find related content.

If you have isolated pages on your site that you’ve never linked to, crawlers may have a difficult time finding and indexing that content.

To find out whether your pages are linked together correctly you can use a free crawler tool like Xenu’s Link Sleuth. This tool will scan your site and help you detect potential holes in your internal link structure.
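To show what a “hole” in the link structure means, here’s a small sketch that finds pages no visitor or crawler could reach by following links from the homepage. The `SITE` link map and the `orphan_pages` function are hypothetical; a crawler tool would build the real map for you.

```python
def orphan_pages(links, home="/"):
    """Return pages that cannot be reached by following links from `home`.

    `links` maps each page to the list of pages it links to.
    """
    seen = {home}
    queue = [home]
    while queue:
        page = queue.pop()
        for target in links.get(page, []):
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return sorted(set(links) - seen)


# Hypothetical internal link map for a small site.
SITE = {
    "/": ["/blog", "/about"],
    "/blog": ["/blog/post-1"],
    "/about": [],
    "/blog/post-1": ["/"],
    "/landing-old": ["/"],  # links out, but nothing links to it
}
print(orphan_pages(SITE))
```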

Meta data

You need to make sure that there aren’t any web pages with missing or duplicate meta titles or descriptions.

Here’s what you should check in detail:

Title tags

The title is the first thing people see in search engine results as well as on social media when you share a specific page on Facebook or Twitter.

So, when you evaluate the titles on your site, make sure they:

- Are short. Aim for about 60 characters. Because search engines measure titles in pixels rather than characters, use Moz’s title preview tool to check that your title tags aren’t too long.

- Are unique for every page.

- Effectively describe the content found on that page.

- Include your targeted keyword, if possible at the beginning of the title.

In addition, you need to check which pages have missing or duplicate title tags.
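Here’s a rough sketch of how you could run these title checks yourself once you have a list of pages and their titles. The `title_issues` function and the sample data are hypothetical, and the 60-character limit is only the approximation mentioned above, since the real limit is measured in pixels.

```python
from collections import Counter

MAX_TITLE_CHARS = 60  # rough guide only; the real limit is in pixels


def title_issues(titles):
    """Flag missing, duplicate and overlong titles.

    `titles` maps each URL to its title text ("" if the page has none).
    """
    counts = Counter(t.strip().lower() for t in titles.values() if t.strip())
    report = {}
    for url, title in titles.items():
        clean = title.strip()
        if not clean:
            report[url] = "missing"
        elif counts[clean.lower()] > 1:
            report[url] = "duplicate"
        elif len(clean) > MAX_TITLE_CHARS:
            report[url] = "too long"
    return report


# Hypothetical pages and titles.
TITLES = {
    "/": "Home | Example Plumbing",
    "/services": "Home | Example Plumbing",
    "/contact": "",
    "/about": "About Example Plumbing",
}
print(title_issues(TITLES))
```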

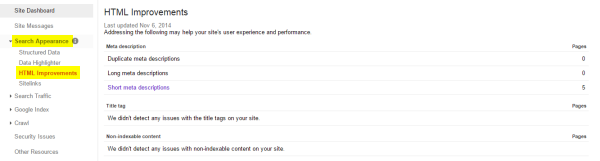

Go to your GWT account -> Search Appearance -> HTML Improvements to see if you have any problems with your meta data:

Meta descriptions

The meta description needs to summarise the content on a page because this too will show up in search engine results together with the title tag. While it won’t help you rank higher, a well-written meta description can have a big impact on whether users decide to click through or not, so it should be written to “sell”.

Make sure that each meta description:

- Is unique.

- Includes the keyword you’re targeting on that page.

- Is between 100 and 150 characters, including spaces.

- Includes a call-to-action that entices people to click.
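The length and keyword checks are easy to automate, as in this sketch. The `description_issues` function is a made-up helper using the 100 to 150 character range from the checklist; uniqueness and the call-to-action still need a manual read.

```python
def description_issues(description, keyword, min_len=100, max_len=150):
    """Check a meta description's length (including spaces) and keyword."""
    issues = []
    text = description.strip()
    if not min_len <= len(text) <= max_len:
        issues.append(f"length {len(text)} outside {min_len}-{max_len}")
    if keyword.lower() not in text.lower():
        issues.append("keyword missing")
    return issues


# Hypothetical description that is far too short and lacks the keyword.
print(description_issues("Too short", "seo audit"))
```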

Alt tags

Naming your images correctly plays a big part in image optimisation. So check to see if:

- All the images on your site include a descriptive alt tag so that search engines can “read” them.

- Your images are named correctly. An image of your company’s logo shouldn’t be named logo.jpg but company-name-logo.jpg.

In case you’re not aware, images can drive a good amount of traffic through image searches. In addition, by giving an image, like your logo image, a correct name you can also prevent your brand’s logo from being hijacked.
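To find images without alt text on a page, you could run its HTML through something like the sketch below, which uses Python’s built-in `html.parser`. The `MissingAltFinder` class and the sample HTML are hypothetical. Note that it also flags empty alt text, which is sometimes used on purpose for purely decorative images.

```python
from html.parser import HTMLParser


class MissingAltFinder(HTMLParser):
    """Collect the src of every <img> that has no (or empty) alt text."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            attributes = dict(attrs)
            if not (attributes.get("alt") or "").strip():
                self.missing.append(attributes.get("src", ""))


def images_missing_alt(html):
    finder = MissingAltFinder()
    finder.feed(html)
    return finder.missing


# Hypothetical page fragment.
PAGE = (
    '<img src="company-name-logo.jpg" alt="Company Name logo">'
    '<img src="banner.jpg">'
    '<img src="team.jpg" alt="">'
)
print(images_missing_alt(PAGE))
```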

H tags

When auditing your site, check your H tags to see whether any are missing or whether you have too many. Ideally, your main headline should be in h1 and then subheadings should use h2, h3… h6. Don’t jump from h1 to h3 as you’ll break the heading structure.
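A quick way to spot a broken heading structure is to list the heading levels in page order and look for jumps, as in this sketch. `HeadingCollector` and `heading_issues` are hypothetical names, and treating “exactly one h1” as the rule is an assumption based on the advice that the main headline goes in h1.

```python
from html.parser import HTMLParser

HEADING_TAGS = {"h1", "h2", "h3", "h4", "h5", "h6"}


class HeadingCollector(HTMLParser):
    """Record the level of every heading tag in document order."""

    def __init__(self):
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        if tag in HEADING_TAGS:
            self.levels.append(int(tag[1]))


def heading_issues(html):
    collector = HeadingCollector()
    collector.feed(html)
    levels = collector.levels
    issues = []
    # Assumption: one main headline per page, so exactly one h1.
    if levels.count(1) != 1:
        issues.append(f"expected one h1, found {levels.count(1)}")
    # A heading may go at most one level deeper than the one before it.
    for previous, current in zip(levels, levels[1:]):
        if current > previous + 1:
            issues.append(f"h{previous} jumps to h{current}")
    return issues


print(heading_issues("<h1>Guide</h1><h3>Details</h3>"))
```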

Keyword cannibalism

One important thing that an SEO audit should reveal is whether you have multiple pages on your site that target the same keyword. This is called keyword cannibalism and it’s bad because:

- It creates confusion for the search engines as you have two pages that are competing against each other.

- It creates confusion for visitors.

So if you discover two or more pages that are associated with the same keyword, you can either merge the pages and 301 redirect or repurpose one of the pages to target other keywords.
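If you keep a record of which keyword each page targets, finding the overlaps takes only a few lines. The page-to-keyword mapping and the `cannibalised_keywords` function below are hypothetical examples.

```python
from collections import defaultdict


def cannibalised_keywords(page_keywords):
    """Return keywords targeted by more than one page.

    `page_keywords` maps each URL to the keyword that page targets.
    """
    pages_by_keyword = defaultdict(list)
    for url, keyword in page_keywords.items():
        pages_by_keyword[keyword.strip().lower()].append(url)
    return {
        keyword: sorted(urls)
        for keyword, urls in pages_by_keyword.items()
        if len(urls) > 1
    }


# Hypothetical mapping of pages to their target keywords.
TARGETS = {
    "/emergency-plumber": "emergency plumber london",
    "/24-hour-plumber": "Emergency Plumber London",
    "/boiler-repair": "boiler repair london",
}
print(cannibalised_keywords(TARGETS))
```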

Duplicate content

Here is a quick checklist for when you audit your content:

- A page shouldn’t have fewer than 250-300 words.

- The content should provide value to its audience. You can determine that by looking at metrics such as time spent on page and bounce rate in your analytics account.

- The content needs to be optimised for your targeted keyword. However, make sure not to go overboard because then it’ll look spammy and you might also get penalised.

- There are no grammar errors.

- It’s easily readable. Try the Hemingway Editor, it’s my favourite.

Another important thing to look at is duplicate content. Search engines don’t like it, which has been even more apparent since the Google Panda algorithm update, so you need to do everything possible to identify and resolve any duplicate content issues that your site may have.

To find out if you have content that exists in a similar form on another page or website you can use the Copyscape tool.
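For pages on your own site, you can also get a rough similarity score yourself. The sketch below compares two texts by the three-word sequences they share (a Jaccard score over word “shingles”). This is one simple technique chosen for illustration, not how Copyscape or search engines work, and the function names are made up.

```python
def shingles(text, size=3):
    """Split text into the set of overlapping word sequences of `size`."""
    words = text.lower().split()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def similarity(text_a, text_b, size=3):
    """Return a 0-1 score: shared shingles divided by all shingles."""
    a, b = shingles(text_a, size), shingles(text_b, size)
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


# Hypothetical copy from two location pages that differ by one word.
page_a = "we offer emergency plumbing services across london"
page_b = "we offer emergency plumbing services across manchester"
print(round(similarity(page_a, page_b), 2))
```

A score close to 1 suggests the two pages are near-duplicates and worth a closer look.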

You might discover that you have pages on your site with the same or very similar content which you can easily fix by:

- Rewriting it to make it unique.

- Doing a 301 redirect of the duplicate content to the canonical page.

Or you might find that someone else has stolen your content in which case you can follow this advice in order to have your content removed from their site.

4. Backlinks

Look at all the links that are pointing to your site and check to see:

- How many sites are linking to yours.

- If those links come from reputable, authority sites. (If they’re spammy sites with low quality content, they can harm your site’s reputation and SEO efforts.)

- If those links are related to your site’s content.

- If the anchor text on each of those sites appears natural. If too many of your site’s backlinks use the same exact match anchor text such as “24/7 plumbing services in London”, search engines will flag those links as being unnatural.

To get an overview of the links pointing to your site you can either use Open Site Explorer or Google Webmaster Tools.
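Once you’ve exported your backlinks from one of these tools, a short script can show whether one anchor text dominates. This is a sketch only: `over_used_anchors` is a hypothetical helper and the 30% threshold is an assumption, not a figure published by any search engine.

```python
from collections import Counter


def anchor_text_share(anchors):
    """Return each anchor text's share of all backlinks (0-1)."""
    counts = Counter(anchor.strip().lower() for anchor in anchors)
    total = sum(counts.values())
    return {text: count / total for text, count in counts.most_common()}


def over_used_anchors(anchors, max_share=0.30):
    """List anchor texts that exceed an assumed share of all backlinks."""
    shares = anchor_text_share(anchors)
    return [text for text, share in shares.items() if share > max_share]


# Hypothetical export: 6 of 10 backlinks use the same exact-match anchor.
ANCHORS = ["24/7 plumbing services in London"] * 6 + [
    "Example Plumbing",
    "example.com",
    "this site",
    "here",
]
print(over_used_anchors(ANCHORS))
```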

Make sure you read our guide that explains how to find those low quality backlinks that need to be removed.

5. Social media analysis

These days, the success of your site can also be measured by its ability to engage users and attract social mentions.

So when you’re analysing your site’s social engagement you should be looking at:

- How many likes, retweets, +1s your content is receiving

- How much traffic each social network is sending you.

- Which pages get the most traffic from social networks. You can find these stats in your Google Analytics account.

Here is a collection of tools that you can use to monitor mentions and social sentiment so you can see how you’re performing at building engagement and how your audience feels about your brand.

6. Competitive analysis

I hope you’re not feeling like giving up, because it’s time to run the analysis all over again, this time for your site’s competitors.

Believe me, you don’t want to skip this step because it’s how you’ll be able to identify and exploit your competitors’ weaknesses. Check out this beginner’s guide to SEO competitor analysis that explains how to get extremely valuable information and insights on your competitors’ links, content and site structure and how to use all this to your advantage.

Wrapping up

These steps should give you an overview of how well your website is performing from an SEO standpoint. You should also have some good ideas about what needs working on.

Even if it’s a lot of work, try to perform an SEO audit at least once a year. You’ll see, it’ll get easier and easier with every SEO audit you conduct.

Have questions or need further information on one of the steps? Let me know in a comment below.