What’s Robots.txt and How Do I Optimise it for WordPress?

Robots.txt is a tiny file that sits at the root of your domain and helps search engines to understand which pages should (or shouldn’t) show up in search results. And it isn’t something most small website owners need to worry about. If you’re happy with the way the pages of your site currently appear on Google, you can probably leave Robots.txt alone.

Learning a little more about it can offer some insight, though, into how search engines interact with websites. As we’ll go into, there may be certain times when it’s necessary to make changes.

Understanding Robots.txt

What is Robots.txt?

Robots.txt is a simple text file that sits at the root of your domain. It works like a set of guidelines for search engines like Google or Bing, helping them to understand the pages and files on your website.

It’s a useful tool for website owners to control how content is discovered and displayed in search engine results. Specifically, the file tells search engine “crawlers” what to do with your links.

Also known as “bots” or “spiders”, crawlers are automated programs that systematically scan through web pages. Robots.txt provides instructions to these crawlers on which pages to follow and which ones to avoid.

This may all conjure up images of Neo dodging bullets in the Matrix. The reality is more like a pedantic librarian or hallway monitor, telling search engines where and where not to go.

The Robots.txt file forms part of a set of rules called the Robots Exclusion Protocol (REP). These rules help control which parts of a site get visited. While reputable search engines abide by this system, it’s important to note that the file can’t enforce these rules. Crawlers from less reputable search engines might choose not to listen.

Links: To follow or not to follow

When it comes to optimising your website for search engines (SEO), you can choose from two slightly different kinds of link, depending on how valuable the link is likely to be.

This is important because the authority of linked pages is a factor that search engines use to rank results. Earning links from reputable websites can boost your search ranking.

☐ Dofollow: Standard links as found on most web pages. When crawlers follow them, they can pass on a small amount of authority to the linked website.

<a href="https://www.123-reg.co.uk">Click here</a>

☐ Nofollow: These links tell search engines not to follow or pass along any ranking power. They’re primarily used for paid ads, user-generated content (like forum comments), or untrusted sources where it wouldn’t be a good idea to pass on authority.

<a href="https://www.123-reg.co.uk" rel="nofollow">Click here</a>
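
If you’d like to check which links on a page are marked “nofollow”, here’s a minimal sketch in Python using only the standard library. The LinkAudit class and audit_links function are hypothetical names made up for this example, and the sample HTML simply mirrors the two links above.

```python
from html.parser import HTMLParser


class LinkAudit(HTMLParser):
    """Collect each link's href and whether it carries rel="nofollow"."""

    def __init__(self):
        super().__init__()
        self.links = []  # list of (href, is_nofollow) tuples

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        if href is None:
            return
        # rel can hold several space-separated values, e.g. "nofollow sponsored"
        rel_values = (attributes.get("rel") or "").lower().split()
        self.links.append((href, "nofollow" in rel_values))


def audit_links(html):
    """Return (href, is_nofollow) for every <a> tag found in the HTML."""
    parser = LinkAudit()
    parser.feed(html)
    parser.close()
    return parser.links


sample_html = (
    '<a href="https://www.123-reg.co.uk">Click here</a>'
    '<a href="https://www.123-reg.co.uk" rel="nofollow">Click here</a>'
)

for href, is_nofollow in audit_links(sample_html):
    print(href, "nofollow" if is_nofollow else "dofollow")
```

The first link is reported as “dofollow” simply because it has no rel="nofollow" attribute; there’s no separate “dofollow” attribute to look for.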

Do I need to worry about Robots.txt?

For most small website owners, the default Robots.txt settings are just fine — no need to overthink it! The same goes for links: stick with regular (“dofollow”) links to quality websites. With tools like Website Builder or WordPress, links in comments and other user areas are usually set to “nofollow” automatically, which is exactly what you want.

However, it’s still a good idea to familiarise yourself with Robots.txt and understand how it can be used to control search engine crawling on your site.

There are certain cases where it’s necessary to edit Robots.txt. If you’re in control of a larger business website with hundreds of pages, for instance, you may need to guide crawlers towards the most important areas of your website so that search engines (and ultimately users at home) can get to grips with it.

Why edit Robots.txt?

You can use Robots.txt to set which pages search engine robots shouldn’t crawl, or to prevent specific bots from crawling your site at all. First and foremost, the file can have a big impact on Search Engine Optimisation (SEO). You’ll want to be sure that crawlers can reach your most important pages so that they have the best chance of ranking well in search results.

For large websites, you might wish to prioritise the crawling of important pages by disallowing less important ones. This helps to make sure your most valuable content gets indexed.

If you’re concerned about resource management (i.e. server performance) and the amount of traffic coming in, Robots.txt lets you tell crawlers to slow down or focus on specific areas to boost speed.
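
If you’re curious what a “slow down” instruction looks like, here’s a minimal sketch using Python’s built-in urllib.robotparser module. It assumes the non-standard Crawl-delay directive, which some crawlers honour and others (Google’s included) ignore. The /search/ path and the ExampleBot name are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: ask every crawler to wait 10 seconds between requests
# and to stay out of an (assumed) internal search results area.
RULES_WITH_DELAY = """\
User-agent: *
Crawl-delay: 10
Disallow: /search/
"""

parser = RobotFileParser()
parser.parse(RULES_WITH_DELAY.splitlines())

# A polite crawler would read the delay and pause between its requests.
delay = parser.crawl_delay("ExampleBot")
print("Seconds to wait between requests:", delay)
```

Because the directive is only a request, it’s worth checking each search engine’s own documentation before relying on it.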

The file can also play a role when it comes to sensitive data. Robots.txt isn’t a bullet-proof solution, but it can help stop the indexing of admin login areas or other areas you wouldn’t want showing up on Google. It’s also a way to block access to unfinished pages before they’re ready to be seen.

Beyond links, you can also use Robots.txt to disallow the crawling of image directories that you don’t want to appear in search results.

How does Robots.txt work?

Robots.txt works like a map for search engines, telling them where they can and can’t go on your website. The instructions in the file, like “Disallow” and “Allow,” tell search engines which areas to avoid. For example, if you have an e-commerce website, you can use it to hide certain pages from search engines.

If you have a website, you can see its Robots.txt file by typing your domain into your browser, followed by a forward slash and robots.txt, e.g. www.[yourdomain].com/robots.txt
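
The same rule can be sketched in a few lines of Python: whatever page you start from, the file always lives at /robots.txt on the same domain. The robots_url function is a hypothetical helper written for this example, and example.com is a placeholder domain.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url):
    """Return the robots.txt address for the site that page_url belongs to."""
    parts = urlsplit(page_url)
    # Keep the scheme and domain, then swap the path for /robots.txt
    # and drop any query string or fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_url("https://www.example.com/blog/my-post/?page=2"))
```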

The basic structure of the Robots.txt file is like this:

User-agent: *

Disallow: /admin/

Disallow: /private/

Allow: /public/

User-agent: This line targets all search engine crawlers with the asterisk (*) wildcard. It means the following rules apply to any web crawler that finds this file.

Disallow: /admin/ This line tells search engines not to crawl any pages or files within the website’s “/admin/” directory. This is a common way to keep administrative areas out of search results, although it doesn’t password-protect them or stop people visiting them directly.

Disallow: /private/ This line tells search engines not to crawl any pages or files within the website’s “/private/” directory. This could be used for member-only sections, user profile pages, or any content you don’t want to appear in search results.

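Allow: /public/ This line tells search engines that they may crawl pages and files within the website’s “/public/” directory. It’s most useful for making an exception inside an area that’s otherwise disallowed.

Sitemap: This optional line isn’t part of the example above, but you’ll often see it in Robots.txt files (e.g. Sitemap: https://www.example.com/sitemap.xml). It helps search engines find your website’s sitemap. A sitemap lists all the important pages you want to be indexed, making it easier for search engines to discover your content.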
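
To see how a crawler might read these rules, here’s a minimal sketch using Python’s built-in urllib.robotparser module (the site_maps() call needs Python 3.8 or later). The load_rules function, the page paths being tested and the example.com domain are assumptions made for illustration.

```python
from urllib.robotparser import RobotFileParser

# The example rules from above, plus the optional Sitemap line.
EXAMPLE_RULES = """\
User-agent: *
Disallow: /admin/
Disallow: /private/
Allow: /public/
Sitemap: https://www.example.com/sitemap.xml
"""


def load_rules(robots_text):
    """Parse robots.txt text into a parser that can answer crawl questions."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


rules = load_rules(EXAMPLE_RULES)

# Hypothetical pages to check against the rules.
for path in ("/admin/settings", "/private/profile", "/public/page", "/blog/hello-world"):
    url = "https://www.example.com" + path
    verdict = "allowed" if rules.can_fetch("*", url) else "blocked"
    print(path, verdict)

print(rules.site_maps())
```

Pages under /admin/ and /private/ come back as blocked, while everything else is allowed, including paths that no rule mentions. One caveat: Python’s parser applies the first rule that matches, whereas Google uses the most specific matching rule, so the two can disagree when rules overlap.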

Where do I find my Robots file in WordPress?

Your WordPress Robots.txt file can be found within your website’s files after you’ve connected to your site via FTP. From there, you can manually edit your file to set which robots can crawl your pages, along with the pages they should and shouldn’t crawl.

How do I add/optimise my Robots file?

You can optimise your Robots file via a plugin or via FTP. If you’re not particularly tech-savvy, you can install a WordPress plugin to manage your Robots file for you. To do this, simply follow the instructions outlined below.

Via Plugin

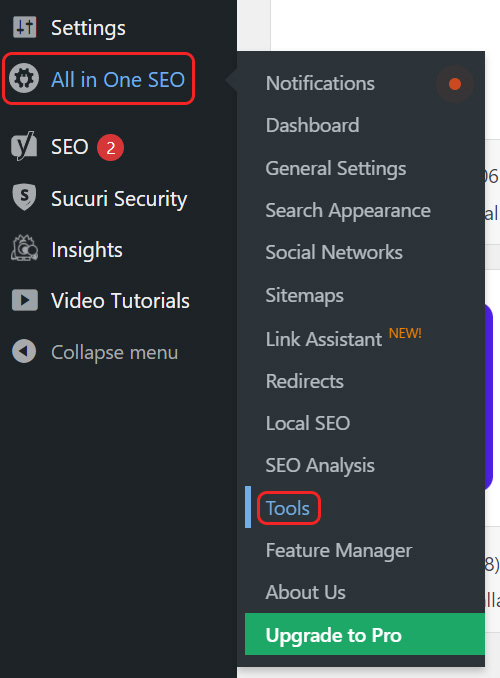

Start by installing and activating a good plugin that’ll do the job for you. We’re fans of All In One SEO — with just a few clicks, you can easily add, remove, or modify directives to control how search engines crawl and index your website.

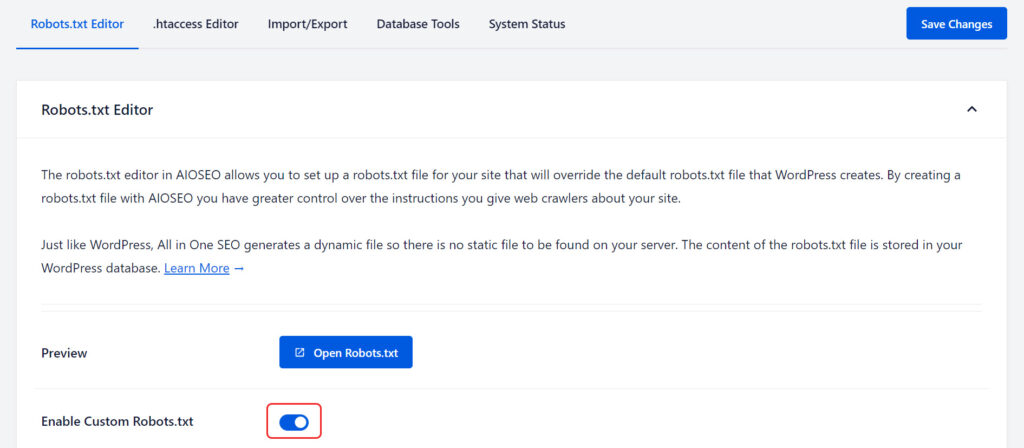

Once installed, hover your cursor over All in One SEO in the left-hand menu and select Tools from the menu that appears.

On the next page, you’ll be shown the Robots.txt Editor. Simply toggle the option for Enable Custom Robots.txt to enable Robots.

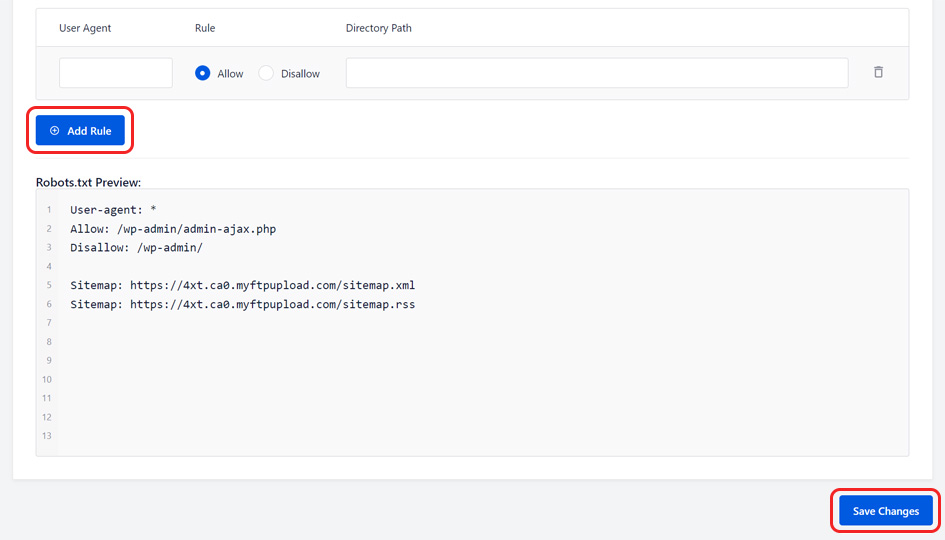

From here, you can add your own rules by entering values into the provided text boxes:

User Agent: which bots you wish to target. If you want to target ALL bots, simply enter *

Rule: set whether you want to Allow or Disallow the rule

Directory Path: set where your rule should be set

Once you’re happy, click Add Rule to insert your rule, followed by Save Changes.
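
To picture how the three values in each row end up as lines in the file, here’s a rough sketch in Python. It isn’t the plugin’s own code: render_robots_txt is a hypothetical helper, and the /wp-admin/ rules are illustrative values only.

```python
def render_robots_txt(rules):
    """Turn (user_agent, rule, path) rows into robots.txt text.

    Rows are grouped by user agent, keeping the order in which they were added.
    """
    groups = {}
    for user_agent, rule, path in rules:
        if rule not in ("Allow", "Disallow"):
            raise ValueError(f"Rule must be Allow or Disallow, got {rule!r}")
        groups.setdefault(user_agent, []).append(f"{rule}: {path}")

    blocks = []
    for user_agent, lines in groups.items():
        blocks.append("\n".join([f"User-agent: {user_agent}", *lines]))
    # A blank line separates the group for one user agent from the next.
    return "\n\n".join(blocks) + "\n"


# Illustrative rows, as you might enter them in the three text boxes.
example_rows = [
    ("*", "Disallow", "/wp-admin/"),
    ("*", "Allow", "/wp-admin/admin-ajax.php"),
]
print(render_robots_txt(example_rows))
```

Each row becomes one Allow or Disallow line beneath the User-agent line it belongs to.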

Via FTP

Viewing or editing the Robots.txt file via FTP will involve accessing your website’s files directly using a program like FileZilla.

Unlike using a plugin, editing via FTP means downloading the Robots.txt file, making changes using a text editor, then uploading it back to your website. It’s a bit more technical and manual compared to using a plugin but gives you direct control.

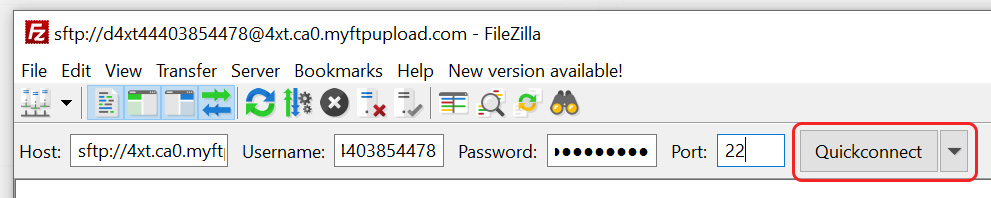

Start by opening FileZilla. Enter your FTP details into the provided fields and click Quickconnect.

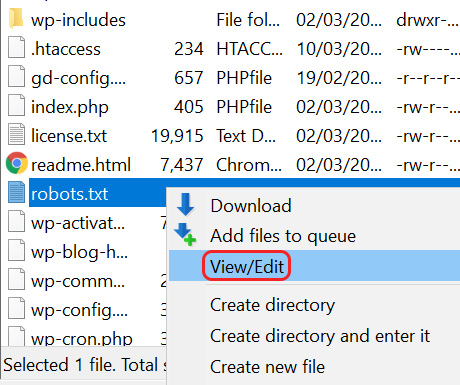

Then, right-click your robots.txt file and select View/Edit.

This will open the file in a text editor such as Notepad, where you can begin adding/editing your rules:

User-agent: which bots you wish to target. If you want to target ALL bots, simply enter *

Disallow: which pages on your site you want robots to avoid

Be sure to save your file after you’ve made any changes. Double-check that the syntax is correct when adding directives to your robots.txt file.

After making edits to your Robots.txt file, it’s a good idea to test them to make sure they’re working as intended. You can use tools provided by search engines, like Google’s own Robots.txt Tester, to check if it’s all working correctly.

Check out Google’s Robots Testing Tool

Remember: Any small mistake in punctuation or spelling might accidentally block search engines from accessing your entire site. And you certainly don’t want that to happen!
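
As a quick safety net before uploading your changes, you could run a check like the sketch below, which uses Python’s built-in urllib.robotparser module to list any must-have pages that your rules would block. The blocked_urls function, the example.com addresses and both sets of rules are assumptions made for illustration, and Python’s parser may not match every search engine’s behaviour exactly.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_text, must_stay_crawlable, user_agent="*"):
    """Return the URLs from must_stay_crawlable that the rules would block."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return [url for url in must_stay_crawlable
            if not parser.can_fetch(user_agent, url)]


# Hypothetical pages that should always remain visible to search engines.
IMPORTANT_URLS = [
    "https://www.example.com/",
    "https://www.example.com/shop/",
]

intended_rules = "User-agent: *\nDisallow: /admin/\n"
# One slip: the path was cut short, so the rule now covers the whole site.
mistaken_rules = "User-agent: *\nDisallow: /\n"

print(blocked_urls(intended_rules, IMPORTANT_URLS))  # an empty list: nothing important is blocked
print(blocked_urls(mistaken_rules, IMPORTANT_URLS))  # both important pages are listed as blocked
```

The only difference between the two sets of rules is the missing “admin/”, which is exactly the kind of slip that’s easy to miss by eye.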

What are permalinks?

Permalinks are the “always there” web addresses for individual posts, pages, and other content on your WordPress site. The paths you list in your Robots.txt rules are matched against these addresses. While the content might change, the idea is that the permalinks stay the same.

You can customise permalinks to be clear and descriptive, helping both humans and search engine robots understand what your content is about. Using tidy and structured permalinks can help your site’s SEO.

How do I update WordPress permalinks?

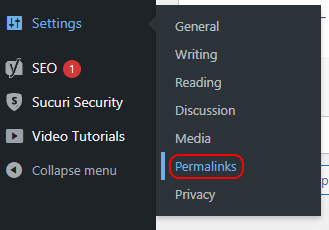

Start by accessing your Managed WordPress dashboard. From there, select Settings from the left-hand menu, followed by Permalinks.

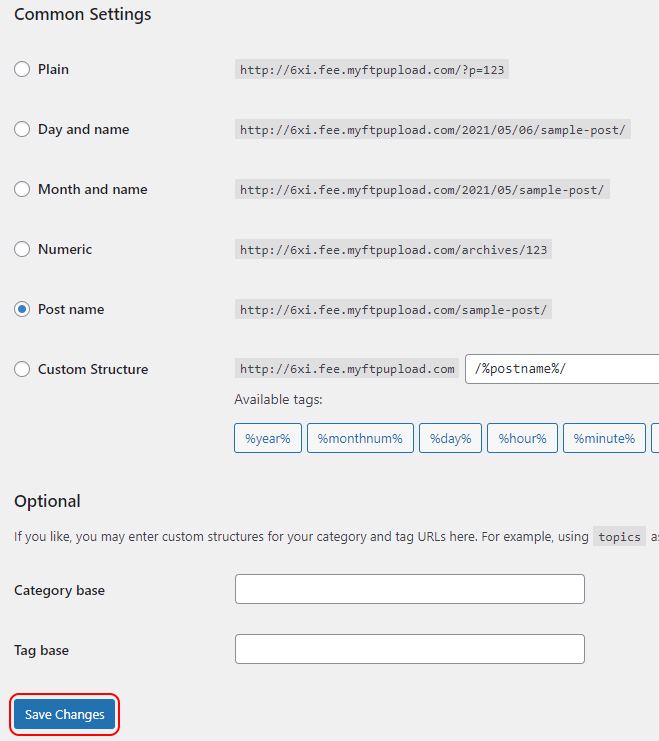

On the next page, you can choose the permalink structure you prefer or create your own custom one. You can also create a structure for your categories and tags. Once you’re happy with your changes, select Save Changes to confirm them.
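
To get a feel for how a custom structure turns into a web address, here’s a simplified sketch in Python. It assumes the structure tags %year%, %monthnum%, %day%, %postname% and %post_id%; the slugify and build_permalink functions are hypothetical helpers, and the slug rules are a simplification rather than WordPress’s own.

```python
import re
from datetime import date


def slugify(title):
    """Simplified slug: lowercase, with runs of other characters turned into hyphens."""
    slug = re.sub(r"[^a-z0-9]+", "-", title.lower())
    return slug.strip("-")


def build_permalink(structure, title, published, post_id):
    """Fill in a permalink structure such as /%year%/%monthnum%/%postname%/."""
    values = {
        "%year%": f"{published.year:04d}",
        "%monthnum%": f"{published.month:02d}",
        "%day%": f"{published.day:02d}",
        "%postname%": slugify(title),
        "%post_id%": str(post_id),
    }
    path = structure
    for tag, value in values.items():
        path = path.replace(tag, value)
    return path


# A made-up post used purely for illustration.
print(build_permalink("/%year%/%monthnum%/%postname%/",
                      "Optimise Your Robots File", date(2024, 3, 5), 42))
# prints /2024/03/optimise-your-robots-file/
```

A descriptive structure like this one tells both visitors and crawlers what the page is about before they’ve even opened it.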

Once saved, the URLs of your website’s pages should reflect the permalink settings you selected. Done!

You can reset your permalinks at any time. Simply follow the same instructions through to the Common Settings screen, but select “Plain” from the list of options, and save your changes once more.

For more, check out: SEO Essentials — The A-Z SEO Guide

Wrap Up

Understanding Robots.txt can help you make better choices about your website and how it appears in search engines. Though not usually a worry for small website owners, this simple file can help all internet users understand more about how search engines work. It helps improve how your important pages show up in search results and lets you control which parts of your site search engines crawl. For bigger websites, it can help balance server resources and keep everything running smoothly.